Tutorial 6: Network

Overview

In this tutorial we are going to cover:

- Network Scope
- TensorOp and its Children
- Customize a TensorOp
- Apphub Examples

Network Scope

Network is one of the three main FastEstimator APIs. It defines not only a neural network model but also all of the operations to be performed on it. This can include the deep-learning model itself, loss calculations, model updating rules, and any other functionality that you wish to execute on a GPU.

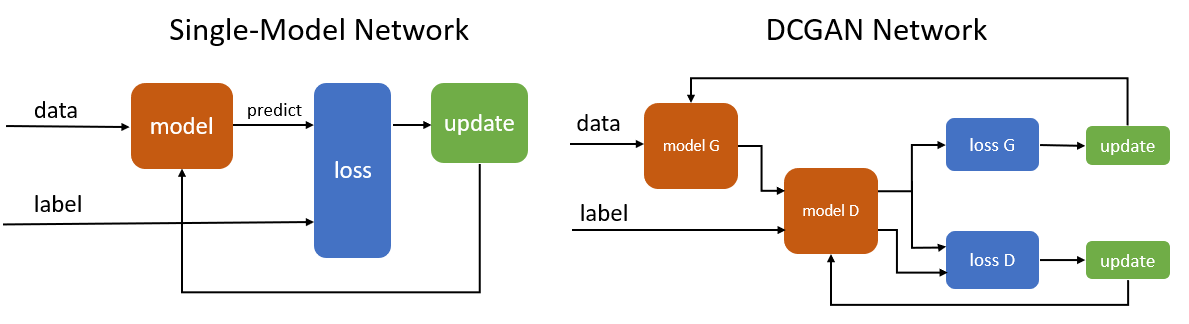

Here we show two Network example graphs to enhance the concept:

As the figure shows, models (orange) are only one piece of a Network. A Network also includes other operations, such as loss computation (blue) and update rules (green), that are used during the training process.
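To make that dataflow concrete, here is a framework-free toy in plain Python. It is not FastEstimator code: the one-weight model, the `params` dictionary, the op functions, and the learning rate are all hypothetical. It only mirrors the idea in the figure: the model is one op among several, and the ops communicate, in order, by reading and writing named keys in a shared batch dictionary.

```python
# Framework-free toy, NOT FastEstimator code: every name here is hypothetical.
params = {"w": 0.5}  # a one-weight "model": y_pred = w * x


def model_op(batch):
    # Orange block: run the model on "x" and store the prediction as "y_pred"
    batch["y_pred"] = params["w"] * batch["x"]


def loss_op(batch):
    # Blue block: squared error between "y_pred" and "y", stored as "loss"
    batch["loss"] = (batch["y_pred"] - batch["y"]) ** 2


def update_op(batch, lr=0.1):
    # Green block: one gradient-descent step on w, using the hand-derived
    # gradient d(loss)/dw = 2 * (y_pred - y) * x for this particular model
    grad = 2 * (batch["y_pred"] - batch["y"]) * batch["x"]
    params["w"] -= lr * grad


network = [model_op, loss_op, update_op]  # ops run in list order

batch = {"x": 2.0, "y": 3.0}
for op in network:
    op(batch)

print(batch["y_pred"], batch["loss"], params["w"])  # 1.0 4.0 1.3
```

Swapping the order of the ops would break the chain, because `loss_op` needs the `"y_pred"` key that `model_op` writes.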

TensorOp and its Children

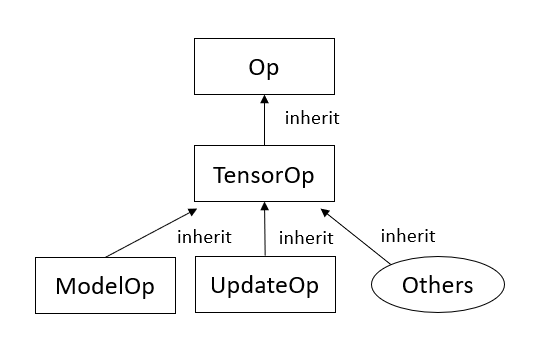

A Network is composed of basic units called TensorOps. All of the building blocks inside a Network should derive from the TensorOp base class. A TensorOp is a kind of Op and therefore follows the same rules described in Tutorial 3.

ModelOp

Any model instance created with fe.build (see Tutorial 5) needs to be wrapped in a ModelOp so that it can interact with the other components inside the Network API. The orange blocks in the first figure are ModelOps.

UpdateOp

FastEstimator uses UpdateOp to associate a model with its loss. Unlike other Ops, which use inputs and outputs to express their connections, UpdateOp uses the model and loss_name arguments instead. The green blocks in the first figure are UpdateOps.

Others (loss, gradient, meta, etc.)

There are many ready-to-use TensorOps that users can directly import from fe.op.tensorop. Some examples include loss and gradient computation ops. There is also a category of TensorOp called MetaOp, which takes other Ops as input and generates more complex execution graphs (see Advanced Tutorial 9).
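The idea of an op that takes other ops as input can be sketched in a few lines of plain Python. This is a hypothetical toy, not FastEstimator's MetaOp API: `toy_repeat` and `add_one` are made-up names, and ops are modeled as plain functions that mutate a batch dictionary.

```python
from typing import Any, Callable, Dict

Batch = Dict[str, Any]
ToyOp = Callable[[Batch], None]


def toy_repeat(op: ToyOp, times: int) -> ToyOp:
    """Hypothetical meta-op: returns a new op that runs `op` `times` times in a row."""
    def wrapped(batch: Batch) -> None:
        for _ in range(times):
            op(batch)
    return wrapped


def add_one(batch: Batch) -> None:
    # Hypothetical op: increments the value stored under "x"
    batch["x"] += 1


batch = {"x": 0}
toy_repeat(add_one, times=3)(batch)
print(batch["x"])  # 3
```

In this toy, the wrapper returns something with the same shape as an ordinary op, so it can be nested inside other wrappers or placed in an op list like any other op.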

For a list of all available Ops, please check out the FastEstimator API documentation.

Customize a TensorOp

FastEstimator lets users customize their own TensorOps by wrapping TensorFlow or PyTorch library calls, or by leveraging fe.backend API functions. Users only need to inherit from the TensorOp class and override its forward method.
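The inherit-and-override pattern can be illustrated with a simplified, hypothetical stand-in for the base class. `ToyTensorOp`, `run_op`, and `Scale` are invented for this sketch. In particular, two rules are simplifying assumptions modeled on the Op rules from Tutorial 3, not FastEstimator's implementation: a single input is passed to `forward` unwrapped, and `mode=None` means "run in every mode".

```python
from typing import Any, Dict, List, Optional, Union


class ToyTensorOp:
    """Simplified, hypothetical stand-in for a TensorOp base class."""

    def __init__(self, inputs: Union[str, List[str]], outputs: str, mode: Optional[str] = None):
        self.inputs = [inputs] if isinstance(inputs, str) else list(inputs)
        self.outputs = outputs
        self.mode = mode  # None: run in every mode (assumption for this toy)

    def forward(self, data: Any, state: Dict[str, Any]) -> Any:
        raise NotImplementedError("subclasses override forward")


def run_op(op: ToyTensorOp, batch: Dict[str, Any], state: Dict[str, Any]) -> None:
    """Gather the op's inputs from the batch, call forward, and store the result."""
    if op.mode is not None and op.mode != state["mode"]:
        return  # this op does not apply to the current mode
    values = [batch[key] for key in op.inputs]
    data = values[0] if len(values) == 1 else values  # unwrap a single input
    batch[op.outputs] = op.forward(data, state)


class Scale(ToyTensorOp):
    """Hypothetical custom op: multiplies every element of its input by a constant."""

    def __init__(self, inputs: str, outputs: str, factor: float, mode: Optional[str] = None):
        super().__init__(inputs, outputs, mode)
        self.factor = factor

    def forward(self, data: List[float], state: Dict[str, Any]) -> List[float]:
        return [value * self.factor for value in data]


batch = {"x": [1.0, 2.0, 3.0]}
run_op(Scale(inputs="x", outputs="x_scaled", factor=2.0), batch, {"mode": "train"})
run_op(Scale(inputs="x", outputs="x_eval", factor=10.0, mode="eval"), batch, {"mode": "train"})
print(batch)  # {'x': [1.0, 2.0, 3.0], 'x_scaled': [2.0, 4.0, 6.0]}
```

The subclass only writes `forward`. The work of wiring keys and checking modes lives in the base class and the runner.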

If you want to customize a TensorOp by directly leveraging API calls from TensorFlow or PyTorch, please make sure that all of the TensorOps in the Network are backend-consistent. In other words, you cannot mix TensorOps built specifically for TensorFlow with TensorOps built specifically for PyTorch in the same Network. Note that the ModelOp backend is determined by the library that the model function uses, so it must be consistent with any custom TensorOp that you write.

Here we are going to demonstrate how to build a TensorOp that takes high-dimensional inputs and returns their average as a scalar value. For a more advanced tutorial on customizing a TensorOp, please check out Advanced Tutorial 3.
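Before looking at the backend-specific versions, it helps to pin down what "an average scalar value" means here. The plain-Python helper below is a hypothetical stand-in, not a library function. It averages over every element, which is what `tf.reduce_mean(data)` and `torch.mean(data)` do when no axis is given. Because the data flowing through a Network is batched, the batch dimension is averaged too, so the op yields one scalar per batch rather than one per sample.

```python
def reduce_mean_all(values: list) -> float:
    """Average over every element of an arbitrarily nested list (no axis argument)."""
    flat = []
    stack = [values]
    while stack:
        item = stack.pop()
        if isinstance(item, list):
            stack.extend(item)  # descend into the nested list
        else:
            flat.append(item)  # leaf element
    return sum(flat) / len(flat)


# Hypothetical batch of 2 samples, each a 2x3 "image"
batch = [
    [[1, 2, 3], [4, 5, 6]],
    [[7, 8, 9], [10, 11, 12]],
]
print(reduce_mean_all(batch))  # 6.5
```

If a per-sample average were wanted instead, the backend call would need an explicit reduction axis (`axis` in TensorFlow, `dim` in PyTorch).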

Example Using TensorFlow

from fastestimator.op.tensorop import TensorOp
import tensorflow as tf

class ReduceMean(TensorOp):
    def forward(self, data, state):
        # No axis given: average over every element, returning a scalar tensor
        return tf.reduce_mean(data)

Example Using PyTorch

from fastestimator.op.tensorop import TensorOp
import torch

class ReduceMean(TensorOp):
    def forward(self, data, state):
        # No dim given: average over every element, returning a scalar tensor
        return torch.mean(data)

Example Using fe.backend

You don't need to worry about backend consistency if you import a FastEstimator-provided TensorOp, or customize your TensorOp using the fe.backend API. FastEstimator auto-magically handles everything for you.

from fastestimator.op.tensorop import TensorOp
from fastestimator.backend import reduce_mean

class ReduceMean(TensorOp):
    def forward(self, data, state):
        # fe.backend function: works with TensorFlow and PyTorch tensors alike
        return reduce_mean(data)

Apphub Examples

You can find some practical examples of the concepts described here in the following FastEstimator Apphubs: