Running inference with the transform method

Running inference means using a trained deep learning model to get a prediction from some input data. Users can call `pipeline.transform` and `network.transform` to feed data forward and get the computed results in any operation mode. Here we use an end-to-end MNIST image classification example (the same code as in Tutorial 8) to demonstrate how to run inference.

We first train a deep learning model with the following code:

```python
import fastestimator as fe
from fastestimator.dataset.data import mnist
from fastestimator.trace.metric import Accuracy
from fastestimator.op.numpyop.univariate import ExpandDims, Minmax, CoarseDropout
from fastestimator.op.tensorop.loss import CrossEntropy
from fastestimator.op.tensorop.model import ModelOp, UpdateOp
from fastestimator.architecture.tensorflow import LeNet

train_data, eval_data = mnist.load_data()
test_data = eval_data.split(0.5)
model = fe.build(model_fn=LeNet, optimizer_fn="adam")

pipeline = fe.Pipeline(train_data=train_data,
                       eval_data=eval_data,
                       test_data=test_data,
                       batch_size=32,
                       ops=[ExpandDims(inputs="x", outputs="x"),  # default mode=None
                            Minmax(inputs="x", outputs="x_out", mode=None),
                            CoarseDropout(inputs="x_out", outputs="x_out", mode="train")])

network = fe.Network(ops=[ModelOp(model=model, inputs="x_out", outputs="y_pred"),  # default mode=None
                          CrossEntropy(inputs=("y_pred", "y"), outputs="ce", mode="!infer"),
                          UpdateOp(model=model, loss_name="ce", mode="train")])

estimator = fe.Estimator(pipeline=pipeline,
                         network=network,
                         epochs=1,
                         traces=Accuracy(true_key="y", pred_key="y_pred"))  # default mode=[eval, test]
estimator.fit()
```


```
FastEstimator-Warn: No ModelSaver Trace detected. Models will not be saved.
FastEstimator-Warn: the key 'x' is being pruned since it is unused outside of the Pipeline. To prevent this, you can declare the key as an input of a Trace or TensorOp.
FastEstimator-Start: step: 1; logging_interval: 100; num_device: 0;
FastEstimator-Train: step: 1; ce: 2.2991223;
FastEstimator-Train: step: 100; ce: 1.9097762; steps/sec: 71.55;
FastEstimator-Train: step: 200; ce: 1.5507967; steps/sec: 71.29;
FastEstimator-Train: step: 300; ce: 1.1750956; steps/sec: 71.01;
FastEstimator-Train: step: 400; ce: 0.8331691; steps/sec: 52.24;
FastEstimator-Train: step: 500; ce: 1.0825467; steps/sec: 49.03;
FastEstimator-Train: step: 600; ce: 0.96594095; steps/sec: 54.5;
FastEstimator-Train: step: 700; ce: 0.7712606; steps/sec: 59.52;
FastEstimator-Train: step: 800; ce: 0.92222476; steps/sec: 64.22;
FastEstimator-Train: step: 900; ce: 0.6225736; steps/sec: 66.74;
FastEstimator-Train: step: 1000; ce: 1.065671; steps/sec: 66.21;
FastEstimator-Train: step: 1100; ce: 0.75097674; steps/sec: 66.05;
FastEstimator-Train: step: 1200; ce: 0.87365466; steps/sec: 66.14;
FastEstimator-Train: step: 1300; ce: 0.63997686; steps/sec: 44.37;
FastEstimator-Train: step: 1400; ce: 0.8474549; steps/sec: 47.43;
FastEstimator-Train: step: 1500; ce: 0.81277585; steps/sec: 39.03;
FastEstimator-Train: step: 1600; ce: 0.8506465; steps/sec: 49.19;
FastEstimator-Train: step: 1700; ce: 0.76338726; steps/sec: 61.44;
FastEstimator-Train: step: 1800; ce: 0.6283993; steps/sec: 65.14;
FastEstimator-Train: step: 1875; epoch: 1; epoch_time: 34.18 sec;
Eval Progress: 1/156;
Eval Progress: 52/156; steps/sec: 197.95;
Eval Progress: 104/156; steps/sec: 216.01;
Eval Progress: 156/156; steps/sec: 206.73;
FastEstimator-Eval: step: 1875; epoch: 1; accuracy: 0.951; ce: 0.16610472;
FastEstimator-Finish: step: 1875; model_lr: 0.001; total_time: 35.46 sec;
```

Let's create a custom print function that makes our inference results easier to inspect:

```python
import numpy as np
import tensorflow as tf
import torch

def print_dict_but_value(data):
    # Print the shape of array/tensor values instead of their full contents
    for key, value in data.items():
        if isinstance(value, np.ndarray):
            print("{}: ndarray with shape {}".format(key, value.shape))
        elif isinstance(value, tf.Tensor):
            print("{}: tf.Tensor with shape {}".format(key, value.shape))
        elif isinstance(value, torch.Tensor):
            print("{}: torch Tensor with shape {}".format(key, value.shape))
        else:
            print("{}: {}".format(key, value))
```
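The `isinstance` chain above requires both TensorFlow and PyTorch to be installed. One alternative is to duck-type on a `.shape` attribute, which NumPy arrays, `tf.Tensor` and `torch.Tensor` all provide. The helper `describe_dict` below is our own hypothetical name and is not a FastEstimator function. Because it reports the concrete class name, an eager TensorFlow tensor would typically appear as `EagerTensor` rather than `tf.Tensor`.

```python
import numpy as np


def describe_dict(data):
    """Print each key with its type and shape (array-like values) or its raw value.

    Hypothetical helper: any value exposing a `.shape` attribute is summarized,
    so neither TensorFlow nor PyTorch needs to be imported here.
    """
    lines = []
    for key, value in data.items():
        shape = getattr(value, "shape", None)
        if shape is not None:
            lines.append("{}: {} with shape {}".format(key, type(value).__name__, tuple(shape)))
        else:
            lines.append("{}: {}".format(key, value))
    print("\n".join(lines))
    return lines


describe_dict({"x": np.zeros((28, 28)), "label": 3})
# x: ndarray with shape (28, 28)
# label: 3
```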

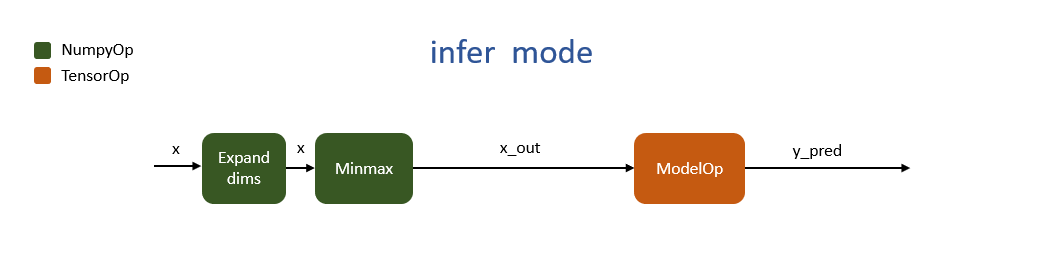

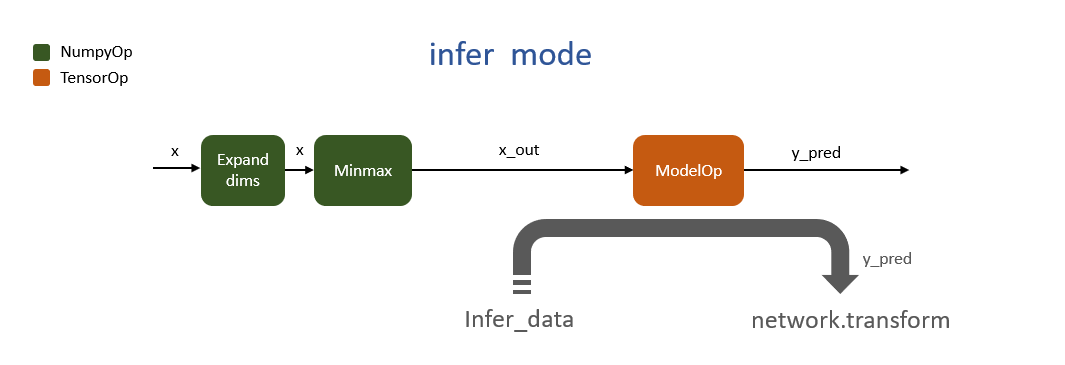

The following figure shows the complete execution graph (consisting of the Pipeline and the Network) for the "infer" mode:

Our goal is to provide an input image "x" and get the prediction result "y_pred".
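Only some of the ops defined earlier appear in the "infer" graph, because each op's `mode` argument controls where it runs. The plain-Python sketch below models those rules as the training code uses them: `None` means every mode, a plain string means only that mode, and a leading `!` excludes a mode. `op_runs_in` and `ALL_MODES` are hypothetical names, and this is not FastEstimator's internal implementation.

```python
ALL_MODES = ("train", "eval", "test", "infer")


def op_runs_in(op_mode, mode):
    """Return True if an op declared with `op_mode` would execute in `mode` (assumed rules)."""
    if op_mode is None:  # None: the op runs in every mode
        return True
    if isinstance(op_mode, str):
        op_mode = [op_mode]
    allowed = set()
    for m in op_mode:
        if m.startswith("!"):  # "!infer": every mode except infer
            allowed |= set(ALL_MODES) - {m[1:]}
        else:
            allowed.add(m)
    return mode in allowed


# (op name, declared mode) pairs taken from the Pipeline and Network above
ops = [
    ("ExpandDims", None),
    ("Minmax", None),
    ("CoarseDropout", "train"),
    ("ModelOp", None),
    ("CrossEntropy", "!infer"),
    ("UpdateOp", "train"),
]

infer_ops = [name for name, op_mode in ops if op_runs_in(op_mode, "infer")]
print(infer_ops)  # ['ExpandDims', 'Minmax', 'ModelOp']
```

This matches the goal stated above. CoarseDropout, CrossEntropy and UpdateOp drop out in "infer" mode, which leaves the chain "x" to "x_out" to "y_pred".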

Pipeline.transform

The Pipeline object has a `transform` method that runs the pipeline graph ("x" to "x_out") on inference data given as a dictionary of keys and values, such as `{"x": image}`. It returns a dictionary of the computed results after applying all Pipeline Ops that are active in the requested mode. The values are NumPy arrays with a leading batch dimension.
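To make the "x" to "x_out" step concrete, here is a NumPy-only sketch of what the infer-mode pipeline does to a single sample. It is not FastEstimator code, and `toy_pipeline_transform` and `minmax` are hypothetical helpers. The sketch assumes three behaviours: `ExpandDims` appends a trailing channel axis, `Minmax` rescales each image into [0, 1], and `transform` adds a leading batch dimension of size 1. These assumptions are consistent with the shapes that `pipeline.transform` prints in this section.

```python
import numpy as np


def minmax(x, epsilon=1e-7):
    """Rescale an array into [0, 1] (assumed behaviour of the Minmax op)."""
    x = x.astype(np.float32)
    x_min, x_max = x.min(), x.max()
    return (x - x_min) / max(x_max - x_min, epsilon)


def toy_pipeline_transform(data):
    """Hypothetical stand-in for pipeline.transform(data, mode="infer").

    Applies only the infer-mode ops (CoarseDropout is train-only, so it is skipped),
    then adds a batch dimension of size 1 to every value.
    """
    out = dict(data)
    out["x"] = np.expand_dims(out["x"], axis=-1)  # ExpandDims: (28, 28) -> (28, 28, 1)
    out["x_out"] = minmax(out["x"])               # Minmax writes the new key "x_out"
    return {key: np.expand_dims(value, axis=0) for key, value in out.items()}  # batch of 1


# A random 28x28 uint8 array stands in for an MNIST digit
image = np.random.randint(0, 256, size=(28, 28), dtype=np.uint8)
result = toy_pipeline_transform({"x": image})
for key, value in result.items():
    print(key, value.shape)
```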

Here we take eval_data's first image, package it into a dictionary, and then call `pipeline.transform`:

```python
import copy

infer_data = {"x": copy.deepcopy(eval_data[0]["x"])}
print_dict_but_value(infer_data)
```

```
x: ndarray with shape (28, 28)
```

```python
infer_data = pipeline.transform(infer_data, mode="infer")
print_dict_but_value(infer_data)
```

```
x: ndarray with shape (1, 28, 28, 1)
x_out: ndarray with shape (1, 28, 28, 1)
```
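The tutorial deep-copies the image before building `infer_data`. We read this as a precaution so that nothing done to the inference dictionary can touch the array stored in `eval_data`. This is our assumption, since the text does not say so. The following plain NumPy sketch, built on a hypothetical stand-in sample, shows the difference between reusing an array and deep-copying it.

```python
import copy

import numpy as np

# Hypothetical dataset sample; in the tutorial this role is played by eval_data[0]
sample = {"x": np.zeros((28, 28), dtype=np.uint8)}

alias = {"x": sample["x"]}                       # same underlying array, no copy
independent = {"x": copy.deepcopy(sample["x"])}  # separate array with the same contents

alias["x"][0, 0] = 255        # an in-place write through the alias reaches the sample
independent["x"][1, 1] = 255  # a write through the deep copy does not

print(sample["x"][0, 0], sample["x"][1, 1])  # 255 0
```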

Network.transform

We then call the `transform` method of the network object, which runs the network graph ("x_out" to "y_pred"). Much like `pipeline.transform`, it returns its Op outputs, though this time as a dictionary of tensors. The data type of the returned values depends on the network's backend: `tf.Tensor` with the TensorFlow backend and `torch.Tensor` with PyTorch. Please check out Tutorial 6 for more details about Network backends.

```python
infer_data = network.transform(infer_data, mode="infer")
print_dict_but_value(infer_data)
```

```
x: tf.Tensor with shape (1, 28, 28, 1)
x_out: tf.Tensor with shape (1, 28, 28, 1)
y_pred: tf.Tensor with shape (1, 10)
```
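Because the returned value types differ per backend, code that expects NumPy may need a conversion step. The sketch below makes two assumptions: an eager TensorFlow tensor can be converted with `.numpy()`, and a PyTorch tensor needs `.detach().cpu().numpy()`. `to_numpy` and `to_numpy_dict` are hypothetical helpers, not part of FastEstimator.

```python
import numpy as np


def to_numpy(value):
    """Convert a backend tensor to a NumPy array via duck typing (hypothetical helper)."""
    if hasattr(value, "detach"):  # torch.Tensor: drop autograd history, move to CPU first
        return value.detach().cpu().numpy()
    if hasattr(value, "numpy"):   # eager tf.Tensor exposes .numpy() directly
        return value.numpy()
    return np.asarray(value)      # NumPy arrays, lists, scalars


def to_numpy_dict(data):
    """Apply to_numpy to every value of a dictionary such as infer_data."""
    return {key: to_numpy(value) for key, value in data.items()}
```

With the tutorial's objects, `to_numpy_dict(infer_data)` should return the same keys with NumPy values. The `detach` check comes first because a PyTorch tensor also has a `.numpy()` method, and that method fails on tensors that require grad or live on a GPU.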

Now we can visualize the input image and compare it with the predicted class.

```python
print("Predicted class is {}".format(np.argmax(infer_data["y_pred"])))
img = fe.util.BatchDisplay(image=infer_data["x"], title="x")
img.show()
```

```
Predicted class is 1
```
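`np.argmax` without an `axis` argument returns an index into the flattened array. That works here because `y_pred` has shape (1, 10), but for a batch of several images it would no longer give one class per image. The following small NumPy sketch uses made-up scores (not outputs of the model trained above) to show the per-sample alternative. Treating the maximum score as a confidence assumes the model's last layer produces softmax probabilities.

```python
import numpy as np

# Made-up scores for a batch of two images over 10 classes (illustrative values only)
y_pred = np.array([
    [0.01, 0.90, 0.01, 0.01, 0.01, 0.01, 0.01, 0.02, 0.01, 0.01],
    [0.04, 0.04, 0.04, 0.70, 0.04, 0.04, 0.04, 0.02, 0.02, 0.02],
])

flat = np.argmax(y_pred)                 # no axis: index into the flattened (20,) array
per_sample = np.argmax(y_pred, axis=-1)  # one class index per image
confidence = np.max(y_pred, axis=-1)     # largest score per image

print(flat)        # 1
print(per_sample)  # [1 3]
print(confidence)  # [0.9 0.7]
```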